MongoDB on EC2: SSD vs. Provisioned IOPS vs. EBS-Optimized instance comparison

Our old plain RAID10 array of 8 EBS volumes was getting too busy, with %lock close to 60%, which in our experience is the maximum acceptable. So we added a couple of High I/O SSD-based EC2 instances (hi1.4xlarge). They were very fast but not perfect: their storage is instance-based, so it lacks durability and we could not snapshot it. A few days ago, though, we heard about the new EBS-Optimized instances and Provisioned IOPS volumes. It sounds confusing, and it is:

In my view, EBS-Optimized instances add a new network interface that gives you an extra 500 Mbps of bandwidth just for talking to EBS volumes. Provisioned IOPS volumes are like regular EBS volumes, but they are sold by IOPS rather than just by space.
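To get a feel for what an extra 500 Mbps means in I/O terms, here is a back-of-the-envelope sketch. It assumes decimal megabits, a fixed 16 KiB size for every I/O and no protocol overhead; none of these figures come from our tests, and `max_iops_for_link` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope ceiling: how many I/O operations per second fit
# through a dedicated link of a given speed.
# Assumptions (not from the post): 1 Mbps = 1,000,000 bits/s, every I/O has
# the same size in KiB, and protocol overhead is ignored.
# max_iops_for_link is a hypothetical helper name.

max_iops_for_link() {
  local mbps=$1 io_kib=$2
  local bytes_per_sec=$(( mbps * 1000000 / 8 ))
  local io_bytes=$(( io_kib * 1024 ))
  # Integer division rounds down to whole operations.
  echo $(( bytes_per_sec / io_bytes ))
}

# 500 Mbps of EBS bandwidth with an assumed 16 KiB I/O size
max_iops_for_link 500 16   # prints 3814
```

Under these assumptions, 8 volumes provisioned at 1000 IOPS each (8000 IOPS in total) could ask for roughly twice what a 500 Mbps link carries, so the link rather than the volumes may become the limit.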

To get an EBS-Optimized instance, choose this option in the console when you launch it. For an existing instance, you have to stop it and then change its attribute from the command line:

```shell
ec2-modify-instance-attribute instance_id --ebs-optimized true
```

BEWARE: this procedure (stopping and starting the instance) changes the instance's DNS name. So expect some downtime on your replica set, and don't forget to update either your replica set configuration or your DNS record to reflect the change.
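The stop, modify and start sequence can be wrapped in a small script. This is a sketch using the EC2 API tools commands `ec2-stop-instances`, `ec2-modify-instance-attribute` and `ec2-start-instances`; the helper names `run` and `make_ebs_optimized`, the `DRY_RUN` switch, the example instance id and the way the script waits for the "stopped" state are all assumptions for illustration.

```shell
#!/usr/bin/env bash
# Sketch: enable EBS optimization on an existing instance with the EC2 API tools.
# run and make_ebs_optimized are hypothetical helpers; DRY_RUN=1 only prints
# the commands instead of executing them.

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

make_ebs_optimized() {
  local id=$1
  run ec2-stop-instances "$id"
  # Assumption: the state name "stopped" shows up in ec2-describe-instances output.
  if [[ "${DRY_RUN:-0}" != 1 ]]; then
    until ec2-describe-instances "$id" | grep -q stopped; do sleep 10; done
  fi
  run ec2-modify-instance-attribute "$id" --ebs-optimized true
  run ec2-start-instances "$id"
}

# Hypothetical instance id; print the plan without touching anything.
DRY_RUN=1
make_ebs_optimized i-12345678
```

After the start, the instance comes back with a new DNS name, so the replica set member still has to be pointed at it (for example with `rs.reconfig()` from the mongo shell) or the DNS record updated.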

To use Provisioned IOPS volumes, you choose how many IOPS to provision for each new EBS volume, respecting the rule that the volume size in GB must be at least 1/10 of the provisioned IOPS. If you want 1000 IOPS (which is the maximum at the time of writing), each volume needs at least 100 GB.
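The sizing rule above boils down to a one-line calculation. This is a minimal sketch of that rule only; `min_volume_gb` is a hypothetical helper, and the 1000 IOPS cap is the per-volume maximum mentioned above, which may change over time.

```shell
#!/usr/bin/env bash
# Minimum Provisioned IOPS volume size under the rule described above:
# size in GB must be at least 1/10 of the provisioned IOPS.
# min_volume_gb is a hypothetical helper name.

MAX_IOPS_PER_VOLUME=1000   # per-volume maximum at the time of writing

min_volume_gb() {
  local iops=$1
  if (( iops < 1 || iops > MAX_IOPS_PER_VOLUME )); then
    echo "IOPS must be between 1 and $MAX_IOPS_PER_VOLUME" >&2
    return 1
  fi
  # Round up so that size_gb * 10 >= iops.
  echo $(( (iops + 9) / 10 ))
}

min_volume_gb 1000   # prints 100
min_volume_gb 250    # prints 25
```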

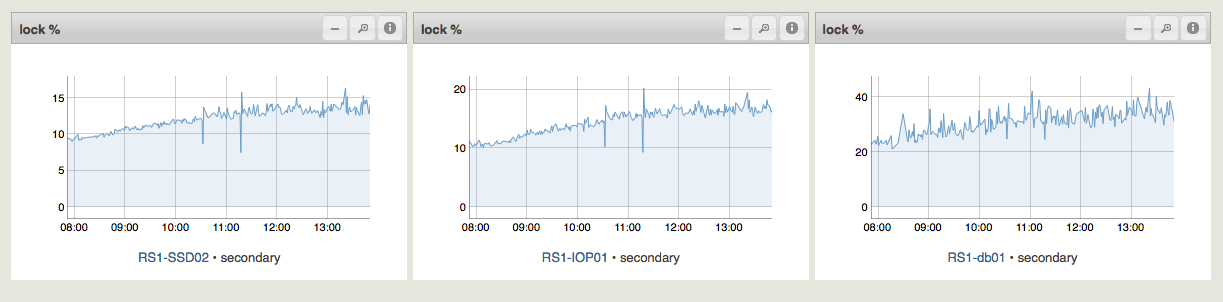

The goal was to compare them in the same role, so all of them are secondary members of the same production replica set, handling on average 700 updates/sec and 100 inserts/sec during the tests.

The setup was:

On the plain EBS instance, with ebs-optimized=true (RS1-DB01):

instance type: m2.4xlarge

8 volumes in RAID10 mounted on /var/lib/mongodb

(journaling turned off)

On the Provisioned IOPS EBS instance (RS1-IOP01):

instance type: m2.4xlarge

8 volumes with 1000 IOPS each, in RAID10, mounted on /var/lib/mongodb

1 volume with 1000 IOPS mounted on /var/lib/mongodb/journal

On the SSD-based instance (RS1-SSD02):

instance type: hi1.4xlarge

1 volume mounted on /var/lib/mongodb

1 volume mounted on /var/lib/mongodb/journal
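For the RS1-IOP01 data array, a rough idea of what 8 Provisioned IOPS volumes in RAID10 add up to can be computed as below. This is an idealized sketch: it assumes perfect striping, that every write lands on both halves of a mirror pair, that reads spread over all members, and that nothing else (such as the instance's network link) gets in the way. The 100 GB per volume is an assumption (the minimum for 1000 IOPS), and `raid10_summary` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Idealized RAID10 arithmetic for an array of equal EBS volumes.
# Assumptions: perfect striping, every write hits both halves of a mirror
# pair, reads are spread over all members, no other bottleneck.
# raid10_summary is a hypothetical helper name.

raid10_summary() {
  local volumes=$1 size_gb=$2 iops_each=$3
  if (( volumes < 2 || volumes % 2 != 0 )); then
    echo "RAID10 needs an even number of volumes (at least 2)" >&2
    return 1
  fi
  local pairs=$(( volumes / 2 ))
  echo "usable_gb=$(( pairs * size_gb ))"
  echo "read_iops=$(( volumes * iops_each ))"
  echo "write_iops=$(( pairs * iops_each ))"
}

# 8 volumes at 1000 IOPS each, 100 GB assumed per volume
raid10_summary 8 100 1000
```

With these inputs it reports 400 GB usable, up to 8000 read IOPS and about 4000 write IOPS; real numbers will be lower once the EBS link and MongoDB's own access pattern come into play.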

These pictures show how they perform, and why we will keep using SSD-backed instances for now while keeping a Provisioned IOPS instance around for snapshots.

This performance difference may be caused by network latency: the hi1.4xlarge has a 10 Gb network interface instead of 1 Gb. Either way, our next step is to put those collections in shards, and we should be fine with the current setup until that happens in a week or two.